When running unit tests, you are not supposed to check the logs generated by the test framework. One of the first principles of test-driven development is that you should be able to run your tests automatically, without any developer interaction. You should not have to check any output besides the test result, and you should never need the log to decide whether a test passed.

So much for the theory.

In practice, however, it is sometimes very helpful to analyse the logging output of your unit tests. When you work test-driven, you also use the unit test runner to develop the code. Looking at the logs during the development phase gives you a closer view of the process you are implementing and can save a lot of time and debugging.

The problem is that you do not want to change the log level in your code just to make your debug or trace messages appear while JUnit runs. Since the effective level in a test run is often INFO (the default depends on the logging implementation you are using and its configuration), you need a quick way to change the logging level.

Change the logging level in Eclipse

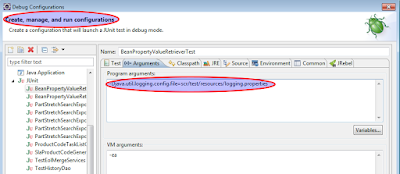

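You can define a custom log configuration file for your JUnit runner by passing it as a JVM system property. For java.util.logging, the property is:

`-Djava.util.logging.config.file=src/test/resources/logging.properties`

To use a custom log settings file, open the run configuration of your test and add this argument to the VM arguments on the Arguments tab. It does not work as a program argument, because `-D` options are read by the JVM, not passed to your test.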
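What goes into the file is not shown here. As a minimal sketch, assuming java.util.logging, the class below holds what a `logging.properties` for tests might contain (the keys are standard java.util.logging properties; FINE is just an example level) and applies it with `LogManager.readConfiguration(InputStream)`, the same method `LogManager` uses to apply the file named by `java.util.logging.config.file`. The class name `TestLoggingConfigSketch` is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.logging.LogManager;
import java.util.logging.Logger;

public class TestLoggingConfigSketch {

    // Example contents of src/test/resources/logging.properties.
    // ".level" sets the root logger; the ConsoleHandler has its own
    // threshold (INFO by default), so it is lowered as well.
    public static final String LOGGING_PROPERTIES = String.join("\n",
            "handlers = java.util.logging.ConsoleHandler",
            ".level = FINE",
            "java.util.logging.ConsoleHandler.level = FINE",
            "");

    // Applies the properties through the same LogManager method that
    // processes the file given by -Djava.util.logging.config.file.
    public static void applyConfiguration() {
        byte[] bytes = LOGGING_PROPERTIES.getBytes(StandardCharsets.ISO_8859_1);
        try (InputStream in = new ByteArrayInputStream(bytes)) {
            LogManager.getLogManager().readConfiguration(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        applyConfiguration();
        Logger log = Logger.getLogger(TestLoggingConfigSketch.class.getName());
        log.fine("visible with the test configuration"); // written to stderr
        System.out.println(Logger.getLogger("").getLevel()); // FINE
    }
}
```

Lowering only `.level` would not be enough: the records would pass the logger but still be dropped by the `ConsoleHandler`, which filters at INFO unless configured otherwise.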

This method is handy when you have one central run configuration that you run many times during development. In most cases, however, it is inconvenient: you need to add the same argument to every run or debug configuration, which slows development down.

Set log level programmatically

Thanks to the APIs of the logging frameworks, you can also set the log level dynamically in your code, and the @Before method is the right place for it. Unfortunately, each framework needs slightly different API calls, but if you stick to one, this is not a big problem.
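A minimal sketch of such a set-up method, using java.util.logging because it ships with the JDK (Log4J and SLF4J need different calls). The class name and the logger name `com.example.Service` are hypothetical, and `@Before` only appears in a comment so that the example compiles without JUnit on the classpath.

```java
import java.util.logging.ConsoleHandler;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.Logger;

public class DebugLoggingSetUpSketch {

    // In a JUnit 4 test class this method would be annotated with
    // @Before (org.junit.Before), so it runs before every test method.
    public void setUp() {
        Logger root = Logger.getLogger("");        // "" is the root logger's name
        root.setLevel(Level.FINEST);               // let all records pass the logger

        Handler[] handlers = root.getHandlers();
        if (handlers.length == 0) {                // fallback if no handler is configured
            root.addHandler(new ConsoleHandler());
            handlers = root.getHandlers();
        }
        for (Handler handler : handlers) {
            handler.setLevel(Level.FINEST);        // handlers filter on their own level too
        }
    }

    public static void main(String[] args) {
        new DebugLoggingSetUpSketch().setUp();
        Logger.getLogger("com.example.Service").finer("now printed to stderr");
    }
}
```

Because the level is set on the root logger, every logger that does not define its own level inherits it, so the code under test does not need to change.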

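Here you can see the implementation for Log4J and SLF4J, the two frameworks I use most. (SLF4J itself is only a facade and offers no call for setting levels, so there the level is changed through the underlying implementation, for example Logback.)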

As you can see, once you have a reference to the root logger, you can change any setting its API allows.
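The level is only one of those settings. As a further java.util.logging sketch (not the Log4J or SLF4J code), the handlers reached through the root logger can also be given a shorter, one-line format, which makes test output easier to scan. `CompactFormatterSketch` and the format itself are hypothetical choices.

```java
import java.util.logging.Formatter;
import java.util.logging.Handler;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

public class CompactFormatterSketch {

    // One line per record: LEVEL logger.name: message
    public static class CompactFormatter extends Formatter {
        @Override
        public String format(LogRecord record) {
            // formatMessage fills in {0}-style parameters and localization
            return record.getLevel() + " " + record.getLoggerName() + ": "
                    + formatMessage(record) + System.lineSeparator();
        }
    }

    // Replaces the formatter on every handler attached to the root logger.
    public static void useCompactFormat() {
        Formatter formatter = new CompactFormatter();
        for (Handler handler : Logger.getLogger("").getHandlers()) {
            handler.setFormatter(formatter);
        }
    }

    public static void main(String[] args) {
        useCompactFormat();
        Logger.getLogger("demo").info("hello"); // prints "INFO demo: hello" to stderr
    }
}
```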

Keep in mind that logging on such a wide scale is usually unnecessary after the development phase. I use this technique only while creating new features, and I remove it or comment it out once the code works as desired.